The X-Robots-Tag is an HTTP response header that website owners use to control how search engines index and handle content on a website.

It gives search engine bots directives such as ‘noindex’ or ‘nofollow’.

The X-Robots-Tag gives you more control over what pages and content get indexed and served in Google’s search results.

The X-Robots-Tag works similarly to the meta robots tag. But there are a couple of big differences in how it applies.

Let me explain:

The X-Robots-Tag works for all files on a website (like images, PDFs, videos, etc.) and is set up on the website’s server.

The robots meta tag only works for individual web pages and is added inside the page’s HTML code.

The bottom line is this…

The X-Robots-Tag gives you more control over how search engines handle all your site’s content.

But it’s also a bit more complicated to set up.

The X-Robots-Tag is important because it gives website owners more control over how search engines crawl and index non-HTML files and documents.

You can also apply directives on a site-wide level, meaning a single rule can cover every file of a specific type on your site.

Let me give you an example:

Let’s say you have a PDF download as gated content. Users must put in their email address before accessing the PDF document.

You don’t want Google to index the PDF file and show it in the Google search results. That would defeat the purpose of making people opt-in to access it.

But a simple robots meta tag won’t help you because the PDF isn’t an HTML file.

That’s where the X-Robots-Tag can help.

You simply add the X-Robots-Tag to the PDF’s HTTP response and Google will know not to index the file.

Quick and easy!

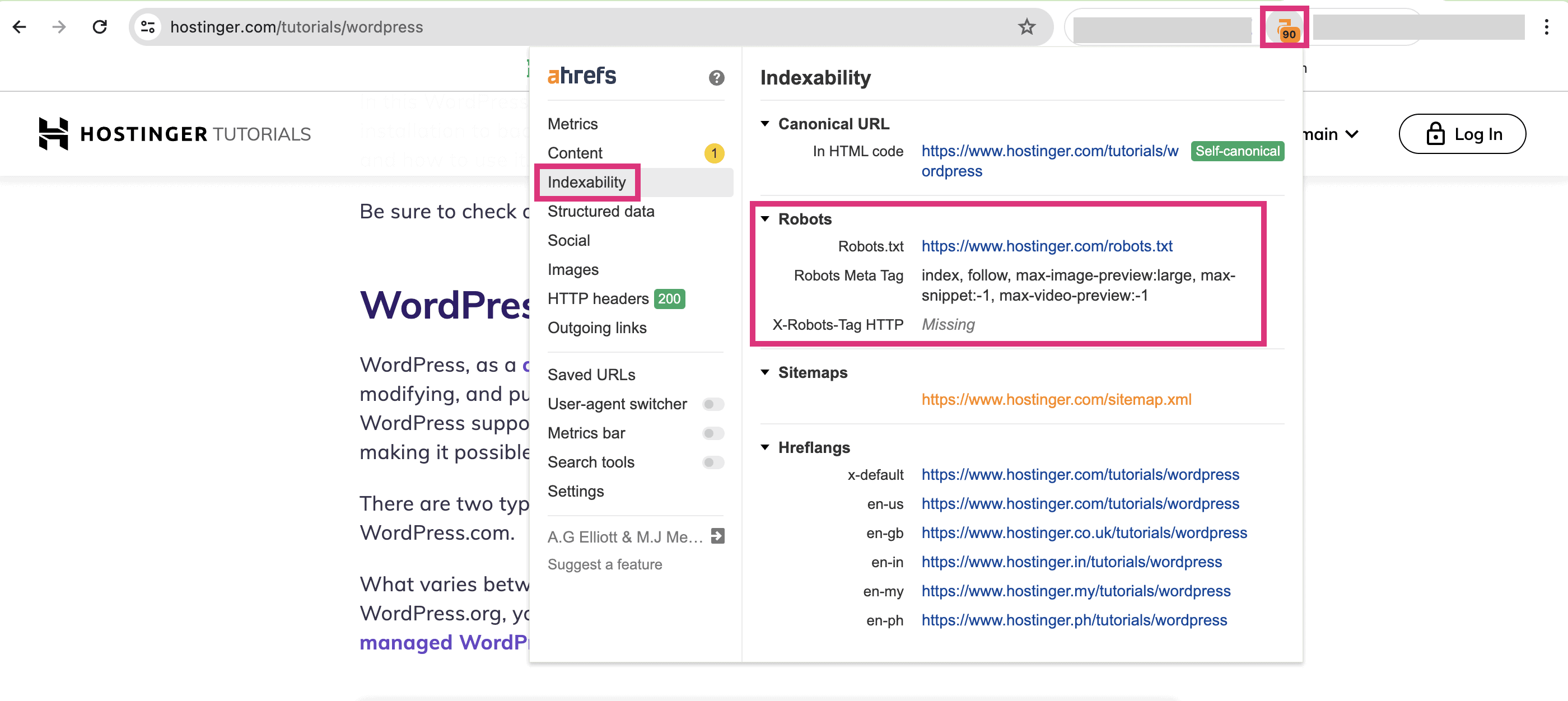

The easiest way to check for an X-Robots-Tag is by using the Ahrefs free Chrome extension.

Install the Ahrefs Chrome extension and load the URL you want to inspect.

Then, click on “Indexability” and under Robots, look for X-Robots-Tag HTTP.

This will quickly tell you if an X-Robots-Tag directive is on the page.
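If you would rather check from a script than from a browser extension, you can read the header straight from the HTTP response. Here is a minimal Python sketch using only the standard library. The function names are my own placeholders, not part of any Ahrefs or Google tool, and it assumes the server answers HEAD requests.

```python
from urllib.request import Request, urlopen


def find_x_robots_tag(headers):
    """Return every X-Robots-Tag value from a list of (name, value) pairs.

    Header names are case-insensitive, and a response may carry the
    header more than once, so all matches are collected.
    """
    return [value.strip() for name, value in headers
            if name.lower() == "x-robots-tag"]


def fetch_x_robots_tag(url):
    """Send a HEAD request to url and return its X-Robots-Tag values."""
    request = Request(url, method="HEAD")
    # urlopen raises an HTTPError for 4xx/5xx responses.
    with urlopen(request, timeout=10) as response:
        return find_x_robots_tag(response.getheaders())
```

Calling `fetch_x_robots_tag()` with the URL of a file you want to inspect returns a list of header values, or an empty list when no X-Robots-Tag is set.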

Here’s a list of the most common directives that can be used in an X-Robots-Tag:

As you can see, many of the X-Robots-Tag directives are very specific.

You can control individual elements on a page and even stop content from being indexed after a certain period of time.
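To make that concrete: a header value is a comma-separated list of directives, and a time-limited rule uses `unavailable_after` followed by a date. The Python sketch below splits a value into its parts. It is a simplified parser of my own, it assumes the date contains no commas, and the sample value is made up for illustration.

```python
def parse_directives(value):
    """Split an X-Robots-Tag value into a dict of directive -> argument.

    Simplifications (assumptions of this sketch):
    - no commas inside arguments, so "25 Jun 2030 15:00:00 PST" works
      but a date like "Fri, 25 Jun 2030" would be split incorrectly;
    - the optional user-agent prefix ("googlebot: noindex") is not handled.
    """
    directives = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        # Split at the first colon only, so times like 15:00:00 stay intact.
        name, _, argument = part.partition(":")
        directives[name.strip().lower()] = argument.strip() or None
    return directives


sample = "noindex, unavailable_after: 25 Jun 2030 15:00:00 PST"
parsed = parse_directives(sample)
print(parsed)
```

Directives without an argument, like `noindex`, map to `None`, while `unavailable_after` keeps its date string.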

This is why the X-Robots-Tag is an essential tool for any website owner.

To set up an X-Robots-Tag you need to configure your web server to add the tag in the HTTP header for the specific file.

Keep in mind that adding an X-Robots-Tag to your site takes a little bit of technical knowledge.

Here are more detailed steps:

On Apache, locate the .htaccess file in your website’s root folder and open it with a plain text editor like Notepad.

Add the X-Robots-Tag directive which should look similar to the following example:

<FilesMatch "\.pdf$">

Header set X-Robots-Tag "noindex, nofollow"

</FilesMatch>

In this example, the tag applies the noindex and nofollow directives to PDF files. To add more directives, separate them with commas.

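Save your changes. Apache reads .htaccess files on every request, so the rule takes effect right away, as long as the mod_headers module is enabled. If you added the directive to the main Apache configuration instead, restart Apache using the following command: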

sudo service apache2 restart

On Nginx, find and open the nginx.conf file with a text editor.

Add the following add_header directive within a location block.

For example:

location ~* \.pdf$ {

add_header X-Robots-Tag "noindex, nofollow";

}

This applies the noindex and nofollow directives to PDF files.

Now save your changes and reload Nginx to activate them:

sudo service nginx reload

Remember: Always back up configuration files before making changes.

You should also carefully test before applying new directives to ensure they are working properly.
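One thing worth testing up front is the file pattern itself, since it decides which URLs receive the header. As a rough sketch, Python’s `re` module can stand in for the server’s regex engine. That is an assumption on my part: Apache and Nginx use PCRE, which should agree with Python on patterns this simple. The paths are invented for illustration, and the case-insensitive flag mirrors Nginx’s `~*` modifier.

```python
import re

# Escaped dot: matches only paths that end in a literal ".pdf".
strict = re.compile(r"\.pdf$", re.IGNORECASE)
# Unescaped dot: "." matches any character, so "/docs/notapdf" also matches.
loose = re.compile(r".(pdf)$", re.IGNORECASE)

paths = [
    "/downloads/guide.pdf",
    "/downloads/GUIDE.PDF",
    "/docs/notapdf",
    "/guide.pdf.html",
]

strict_hits = [p for p in paths if strict.search(p)]
loose_hits = [p for p in paths if loose.search(p)]

print("strict:", strict_hits)
print("loose: ", loose_hits)
```

The escaped pattern catches only the two real PDF paths, while the unescaped one also catches `/docs/notapdf`, which is why escaping the dot is the safer choice.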
